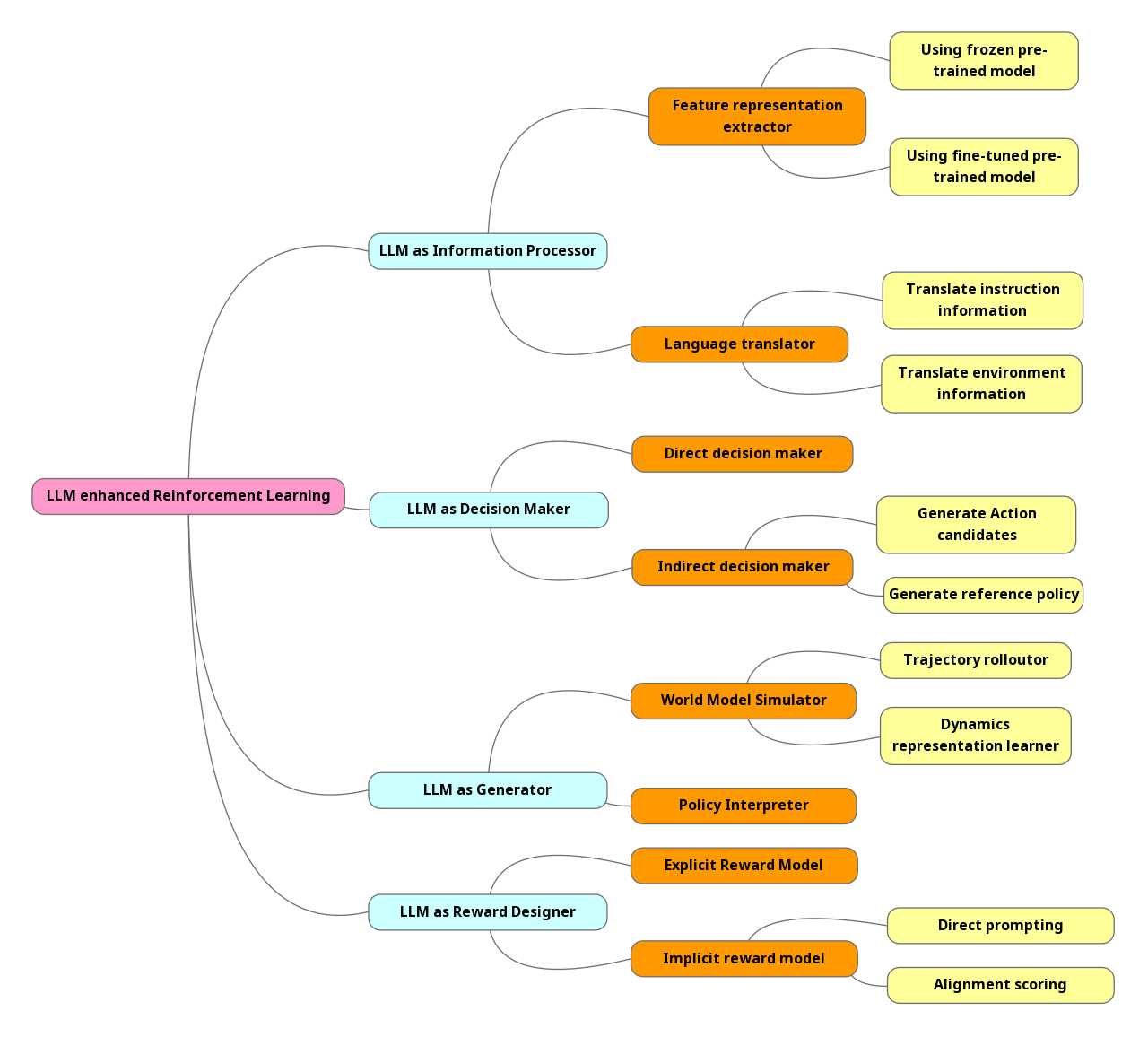

Large language models (LLMs) can enhance reinforcement learning (RL) in several ways.

LLM as information processor

Feature representation extractor

- Directly use a frozen pre-trained model to extract embeddings from observations.

  [1] uses a frozen pre-trained language Transformer for history representation and compression: it maps previous observations to a compressed representation and concatenates it with the current observation.

- Use contrastive learning to fine-tune the pre-trained model so that it learns an invariant feature representation, which improves generalization. The key idea is to learn representations by contrasting positive examples against negative ones.
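The contrastive idea above can be made concrete with a small sketch. The text does not name a specific loss, so the InfoNCE objective used here is an assumption, as are the function names and the temperature value. The embeddings are assumed to be L2-normalized vectors produced by the model being fine-tuned.

```python
import math
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Dot product; equals cosine similarity for L2-normalized vectors."""
    return sum(a * b for a, b in zip(u, v))


def info_nce(anchor: Sequence[float],
             positive: Sequence[float],
             negatives: List[Sequence[float]],
             temperature: float = 0.1) -> float:
    """InfoNCE loss for one anchor (illustrative choice of contrastive loss).

    The loss is the negative log-softmax score of the positive example
    among the positive and all negatives.
    """
    logits = [dot(anchor, positive) / temperature]
    logits += [dot(anchor, n) / temperature for n in negatives]
    # Numerically stable log-sum-exp.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[0]
```

The loss is small when the anchor is closer to the positive than to every negative, and grows when a negative is closer, which is what pushes the representation toward invariance across views of the same observation.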

Language translator

Motivation: An LLM translates natural-language information into a formal, task-specific language, which assists the learning process of the RL agent.

- Instruction information translation:

  Translate natural-language instructions into a task-specific language. [2] proposes an inside-out scheme that prevents the policy from being directly exposed to natural-language instructions and improves the efficiency of policy learning.

- Environment information translation:

  Translate natural-language environment information into a formal domain-specific language, that is, convert natural-language sentences into grounded knowledge the agent can use.

  [3] introduces RLang, a grounded formal language that can express information about all components of a task (policy, reward, plans, and transition function).

Large language model as reward designer

Implicit reward model

Key idea: The LLM provides an auxiliary or overall reward value based on its understanding of the task objectives and the observations.

- Direct prompting:

  [4] treats the LLM as a proxy reward function, prompting it with a few examples of desirable behavior and a description of the preferences behind that behavior.

  [5] introduces a framework that provides interactive rewards mimicking human feedback, based on the LLM's real-time understanding of the agent's behavior. It uses two prompts: one lets the LLM understand the scenario, and the other instructs the LLM on the evaluation criteria.

- Alignment scoring:

  In computer vision, some works use a vision-language model (VLM) as the reward model to align multi-modal data, computing the cosine similarity between visual state embeddings and language description embeddings.
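Both implicit reward styles can be sketched in a few lines. This is a minimal illustration, not the implementation of any cited work: `llm` is a hypothetical callable that maps a prompt string to a text answer, the prompt wording and the yes/no parsing are assumptions, and the embeddings are assumed to come from some VLM that is not shown.

```python
import math
from typing import Callable, Sequence


def proxy_reward(llm: Callable[[str], str],
                 preference: str,
                 examples: Sequence[str],
                 episode: str) -> float:
    """Direct prompting: ask the LLM whether an episode matches the preference."""
    prompt = "\n".join([
        f"Desired behavior: {preference}",
        "Examples of desirable behavior:",
        *[f"- {ex}" for ex in examples],
        f"Episode: {episode}",
        "Does the episode show the desired behavior? Answer yes or no.",
    ])
    answer = llm(prompt).strip().lower()
    # Map the text answer to a binary reward (assumed parsing rule).
    return 1.0 if answer.startswith("yes") else 0.0


def alignment_reward(state_emb: Sequence[float],
                     text_emb: Sequence[float]) -> float:
    """Alignment scoring: cosine similarity between two embeddings."""
    dot = sum(a * b for a, b in zip(state_emb, text_emb))
    norm = (math.sqrt(sum(a * a for a in state_emb))
            * math.sqrt(sum(b * b for b in text_emb)))
    return dot / norm
```

In practice `llm` would wrap a call to an actual model, and the two embedding arguments would come from the image and text encoders of the same VLM so that they live in a shared space.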

Explicit reward model

Key idea: The LLM generates executable code that explicitly specifies how the reward is calculated.

Strengths: The generated code transparently reflects the LLM's reasoning and logic, so humans can read it and further evaluate and optimize it.

- [6] defines lower-level reward parameters based on high-level instructions.

- [7, 8] use a self-refinement mechanism for automated reward function design, consisting of an initial design, an evaluation, and a self-refinement loop.

- [9] develops a reward optimization algorithm with self-reflection, which uses the environment source code and the task description to sample reward function candidates from a coding LLM and evaluates them in RL simulation.

- [10] follows an idea similar to [9]: it generates shaped dense reward functions as executable programs based on the environment description. It then executes the learned policy in the environment, requests human feedback, and refines the reward accordingly.
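The design, evaluation, and refinement loop shared by these works can be sketched as follows. This is a simplified illustration under stated assumptions, not the algorithm of any cited paper: `propose` is a hypothetical stand-in for the coding LLM (it takes a text context and returns reward-function source strings), and `evaluate` is a hypothetical stand-in for training a policy with a candidate reward and measuring task performance.

```python
from typing import Callable, List, Tuple


def refine_reward(propose: Callable[[str], List[str]],
                  evaluate: Callable[[str], float],
                  task: str,
                  rounds: int = 3) -> Tuple[str, float]:
    """Sample reward-code candidates, score them, and feed the best one back."""
    best_code, best_score = "", float("-inf")
    context = task
    for _ in range(rounds):
        # The LLM stand-in proposes candidates given the current context.
        for code in propose(context):
            score = evaluate(code)
            if score > best_score:
                best_code, best_score = code, score
        # Reflection step: the next round sees the best candidate so far.
        context = (f"{task}\nBest reward so far "
                   f"(score {best_score:.2f}):\n{best_code}")
    return best_code, best_score
```

Because the candidates are plain source strings, a human can inspect the returned `best_code` directly, which is the readability advantage noted above. Running LLM-generated code should be sandboxed in any real setup.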

Large language model as decision maker

- Motivation: the power of LLMs. Transformer-based sequence-modeling methods such as Decision Transformer and [11] show great potential in offline RL on sparse-reward and long-horizon tasks [12], and LLMs are themselves large-scale Transformer-based models.

- Direct decision maker

  The LLM directly predicts actions from a sequence-modeling perspective. The objective is to minimize the squared error of the predicted actions. Many works follow this approach:

  [13] uses a pre-trained LLM as a general model for learning task-specific policies across different environments: goals are added to the observations as input and converted into sequential data.

  The key idea of [14] is to use captions that describe the next subgoal before taking an action, which improves the performance of the reasoning policy.

  [15] fine-tunes pre-trained LLMs with LoRA, augmenting the pre-trained knowledge with in-domain knowledge.

- Indirect decision maker

  Key idea: The LLM guides action selection by generating action candidates or by providing a reference policy, which addresses challenges such as enormous action spaces.

  - Action candidates:

    In any given state, only a small fraction of actions is accessible. To address this, [16] proposes a framework that generates a set of action candidates for each state by training a language model on game history.

    [17] extends [16] to robotics. The agent uses an LLM to generate action plans and executes low-level skills. Unlike [16], the LLM is not trained; the action candidates are generated by prompting it with the history and the current observation.

  - Reference policy:

    The strategy an RL agent converges to may not match human preferences, so the LLM provides a reference policy and the policy update is modified based on it.

    [18] uses LLMs to generate a prior policy from human instructions and uses this prior to regularize the RL objective.
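The two indirect roles can be combined in one small sketch: a language model narrows the action space to a few candidates, and its probabilities also act as a prior when the agent picks among them. This is an illustration only, not the method of [16], [17], or [18]. The dictionaries of probabilities and Q-values, the function names, and the `prior_weight` parameter are all assumptions.

```python
import math
from typing import Dict, List


def top_k_candidates(lm_probs: Dict[str, float], k: int) -> List[str]:
    """Keep the k actions the language model considers most likely."""
    return sorted(lm_probs, key=lm_probs.get, reverse=True)[:k]


def select_action(q_values: Dict[str, float],
                  lm_probs: Dict[str, float],
                  k: int = 3,
                  prior_weight: float = 1.0) -> str:
    """Pick among LM-proposed candidates by Q-value plus a weighted log-prior."""
    candidates = top_k_candidates(lm_probs, k)

    def score(action: str) -> float:
        # Clamp the probability to avoid log(0) for zero-probability actions.
        log_prior = math.log(max(lm_probs[action], 1e-12))
        return q_values.get(action, 0.0) + prior_weight * log_prior

    return max(candidates, key=score)
```

With `prior_weight` set to 0 the agent simply takes the best Q-value among the candidates; larger values pull the choice toward what the language model prefers.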

Large language model as generator

- World Model Simulator

  Motivation: Enable RL where data collection is risky or has to take place in the real world.

  - Trajectory rolloutor:

    Use LLMs to synthesize trajectories.

  - Dynamics representation learner:

    Using representation learning techniques, a latent representation of the future can be learned to assist decision-making.
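Trajectory synthesis can be sketched as a standard model-based rollout in which a learned model replaces the real environment. Everything here is an assumption for illustration: `world_model` is a hypothetical callable standing in for the LLM-based simulator (it maps a state and an action to a next state and a reward), and states and actions are represented as strings.

```python
from typing import Callable, List, Tuple

# (state, action, reward, next_state)
Transition = Tuple[str, str, float, str]


def rollout(world_model: Callable[[str, str], Tuple[str, float]],
            policy: Callable[[str], str],
            start_state: str,
            horizon: int) -> List[Transition]:
    """Generate a synthetic trajectory without touching the real environment."""
    trajectory: List[Transition] = []
    state = start_state
    for _ in range(horizon):
        action = policy(state)
        next_state, reward = world_model(state, action)
        trajectory.append((state, action, reward, next_state))
        state = next_state
    return trajectory
```

The synthetic transitions can then be added to the agent's training data in place of, or alongside, transitions collected from the real system.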

- Policy Interpreter

  Objective: Explain the decision-making process of the learning agent.

  Using the trajectory history of states and actions as context, LLMs can generate interpretations of the current policy.
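A minimal sketch of how the trajectory history could be turned into context for the LLM is shown below. The prompt layout and the function name are assumptions; the resulting string would be sent to whatever LLM is used as the interpreter.

```python
from typing import List, Tuple


def build_explanation_prompt(history: List[Tuple[str, str]],
                             question: str) -> str:
    """Format (state, action) pairs as context and append the question."""
    lines = ["Trajectory so far (state -> action):"]
    lines += [f"{t}. {state} -> {action}"
              for t, (state, action) in enumerate(history, start=1)]
    lines.append(question)
    return "\n".join(lines)
```

For example, passing `[("at door", "open door"), ("in hallway", "go north")]` with the question "Why did the agent choose these actions?" yields a numbered trajectory followed by the question.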

References:

[1] F. Paischer et al., “History compression via language models in reinforcement learning,” in International Conference on Machine Learning, 2022: PMLR, pp. 17156-17185.

[2] J.-C. Pang, X.-Y. Yang, S.-H. Yang, and Y. Yu, “Natural Language-conditioned Reinforcement Learning with Inside-out Task Language Development and Translation,” arXiv preprint arXiv:2302.09368, 2023.

[3] B. A. Spiegel, Z. Yang, W. Jurayj, K. Ta, S. Tellex, and G. Konidaris, “Informing Reinforcement Learning Agents by Grounding Natural Language to Markov Decision Processes.”

[4] M. Kwon, S. M. Xie, K. Bullard, and D. Sadigh, “Reward design with language models,” arXiv preprint arXiv:2303.00001, 2023.

[5] K. Chu, X. Zhao, C. Weber, M. Li, and S. Wermter, “Accelerating Reinforcement Learning of Robotic Manipulations via Feedback from Large Language Models,” arXiv preprint arXiv:2311.02379, 2023.

[6] W. Yu et al., “Language to rewards for robotic skill synthesis,” arXiv preprint arXiv:2306.08647, 2023.

[7] A. Madaan et al., “Self-refine: Iterative refinement with self-feedback,” Advances in Neural Information Processing Systems, vol. 36, 2024.

[8] J. Song, Z. Zhou, J. Liu, C. Fang, Z. Shu, and L. Ma, “Self-refined large language model as automated reward function designer for deep reinforcement learning in robotics,” arXiv preprint arXiv:2309.06687, 2023.

[9] Y. J. Ma et al., “Eureka: Human-level reward design via coding large language models,” arXiv preprint arXiv:2310.12931, 2023.

[10] T. Xie et al., “Text2Reward: Reward Shaping with Language Models for Reinforcement Learning.”

[11] M. Janner, Q. Li, and S. Levine, “Offline reinforcement learning as one big sequence modeling problem,” Advances in Neural Information Processing Systems, vol. 34, pp. 1273-1286, 2021.

[12] W. Li, H. Luo, Z. Lin, C. Zhang, Z. Lu, and D. Ye, “A survey on transformers in reinforcement learning,” arXiv preprint arXiv:2301.03044, 2023.

[13] S. Li et al., “Pre-trained language models for interactive decision-making,” Advances in Neural Information Processing Systems, vol. 35, pp. 31199-31212, 2022.

[14] L. Mezghani, P. Bojanowski, K. Alahari, and S. Sukhbaatar, “Think before you act: Unified policy for interleaving language reasoning with actions,” arXiv preprint arXiv:2304.11063, 2023.

[15] R. Shi, Y. Liu, Y. Ze, S. S. Du, and H. Xu, “Unleashing the Power of Pre-trained Language Models for Offline Reinforcement Learning,” arXiv preprint arXiv:2310.20587, 2023.

[16] S. Yao, R. Rao, M. Hausknecht, and K. Narasimhan, “Keep calm and explore: Language models for action generation in text-based games,” arXiv preprint arXiv:2010.02903, 2020.

[17] M. Ahn et al., “Do as I can, not as I say: Grounding language in robotic affordances,” arXiv preprint arXiv:2204.01691, 2022.

[18] H. Hu and D. Sadigh, “Language instructed reinforcement learning for human-AI coordination,” in International Conference on Machine Learning, 2023: PMLR, pp. 13584-13598.

[19] Y. Cao et al., “Survey on Large Language Model-Enhanced Reinforcement Learning: Concept, Taxonomy, and Methods,” arXiv preprint arXiv:2404.00282, 2024.